Style transfer AI is a fascinating technology that lets you apply the artistic style of one image to the content of another. Imagine taking a photo of your city skyline and instantly reimagining it in the bold, swirling style of Vincent van Gogh’s “The Starry Night.” That’s the core magic of style transfer.

This guide will walk you through what style transfer AI is, how the technology works, its real-world applications, and how it fits into the bigger picture of creative AI.

What Is Style Transfer AI and How Does It Work?

At its heart, style transfer AI separates the content of an image from its style. It then combines the content of one image with the style of another to create a completely new, hybrid piece of art. This is much more advanced than a simple photo filter.

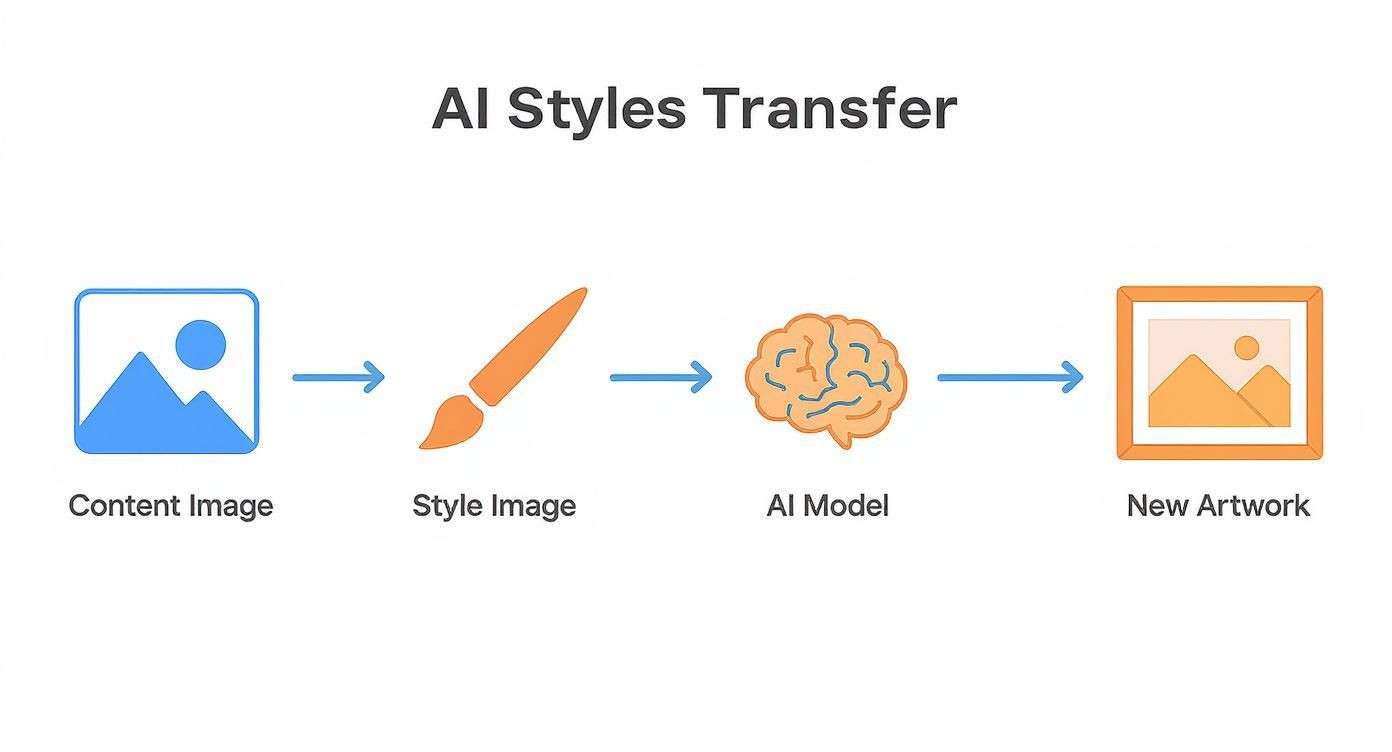

Let’s break down the process:

- Content Image: This is your starting photo—for example, a picture of your dog. The AI analyzes this image to understand its fundamental structure: the shapes, lines, and objects that define the subject. It’s the “what.”

- Style Image: This is the artwork you want to mimic, like a painting by Claude Monet. The AI studies this image not for its subject matter, but for its artistic signature—the brushstrokes, color palette, and textures. This is the “how.”

Merging Content and Style

The AI’s main task is to generate a new image from scratch. This new image must achieve two competing goals simultaneously:

- Preserve the content: The final result must still be clearly recognizable as your original photo (your dog).

- Embody the style: The new artwork must authentically capture the artistic essence of the style image (Monet’s painting).

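In the original, optimization-based approach, the algorithm then adjusts the new image iteratively, using gradient descent to nudge all of its pixels at once, until it reaches a balance between the two goals. The result is a unique creation: your dog, painted in the unmistakable style of an Impressionist master.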
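To make “iteratively adjusts” concrete, here is a deliberately simplified toy sketch, not real style transfer. The “image” is a short list of numbers, and both losses are plain squared distances to a content target and a style target. The function names and the step size are illustrative assumptions. What it does show is the mechanism: gradient descent on a weighted sum of two competing losses, with the weights deciding where the balance lands.

```python
# Toy illustration only: the "image" is a short list of pixel values, and both
# losses are plain squared distances to target values. Real style transfer
# measures these distances in CNN feature space, not on raw pixels.

def total_loss(x, content, style, alpha, beta):
    """Weighted sum of a toy content loss and a toy style loss."""
    content_loss = sum((xi - ci) ** 2 for xi, ci in zip(x, content))
    style_loss = sum((xi - si) ** 2 for xi, si in zip(x, style))
    return alpha * content_loss + beta * style_loss


def optimize(content, style, alpha=1.0, beta=1.0, lr=0.1, steps=200):
    """Start from the content image and move every pixel downhill each step.

    A step size that is too large for the chosen weights makes this diverge.
    """
    x = list(content)  # initialise the generated image from the content image
    for _ in range(steps):
        # Gradient of the total loss with respect to each pixel.
        grads = [2 * alpha * (xi - ci) + 2 * beta * (xi - si)
                 for xi, ci, si in zip(x, content, style)]
        # Update all pixels at once (gradient descent), not one at a time.
        x = [xi - lr * g for xi, g in zip(x, grads)]
    return x


print(optimize([0.0, 0.0], [1.0, 1.0], alpha=1.0, beta=3.0))
```

With `alpha=1.0` and `beta=3.0`, every pixel settles at about 0.75, three quarters of the way from the content value toward the style value; raising `beta` relative to `alpha` pulls the result further toward the style.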

The core idea is simple: deconstruct and reconstruct. The AI breaks down images into their ‘content’ and ‘style’ components, then intelligently merges them to create something entirely new.

The Growing Demand for Creative AI

This technology has sparked immense interest across various industries. The global Style Transfer AI market, valued at around $1.8 billion, is projected to surge to $11.6 billion by 2033. This growth highlights a significant demand for AI tools that can generate unique visuals for everything from digital art to marketing.

This creative principle extends beyond images. In the audio world, AI can now generate unique soundscapes from text prompts, as seen with AI tools that turn text descriptions into sound effects. It’s a testament to the versatility of generative models.

The Neural Networks Behind the Magic

To understand how style transfer AI performs this artistic feat, we need to look at its engine: a Convolutional Neural Network (CNN). Inspired by the human visual cortex, CNNs are exceptionally good at recognizing patterns, shapes, and textures in images, making them ideal for this task.

A CNN processes an image in layers. The initial layers detect basic features like edges and colors. As information moves deeper into the network, the layers identify more complex elements, such as textures, shapes, and eventually, complete objects.
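The idea that early layers respond to edges can be seen with a single convolution. The sketch below slides a hand-written 3x3 vertical-edge kernel (a Sobel-style filter, chosen here for illustration) over a tiny image whose left half is dark and right half is bright. A trained CNN learns many kernels like this from data instead of having them written by hand. Like most deep-learning libraries, the function computes cross-correlation, which is what CNN layers call convolution.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation of a 2D list with a 2D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # Weighted sum of the window under the kernel.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


# Hand-written vertical-edge detector (Sobel-style).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# 4x6 image: dark left half (0), bright right half (1), one vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]
response = conv2d(image, SOBEL_X)
print(response)  # [[0.0, 4.0, 4.0, 0.0], [0.0, 4.0, 4.0, 0.0]]
```

The strong responses appear only where the window straddles the dark-to-bright boundary; flat regions produce 0.0.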

This infographic illustrates how the AI merges a content image and a style image.

As shown, the AI model serves as a creative hub, blending the subject of one image with the aesthetic of another.

The Balancing Act: Content Loss vs. Style Loss

How does the AI know what to change and what to keep? The process is guided by a clever balancing act between two objectives, measured by what are called “loss functions.”

Content Loss: This measures how far the generated image’s feature activations in a deeper layer of the network deviate from those of the original content photo. A high content loss means the subject is no longer recognizable. The AI’s goal is to minimize this loss.
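A minimal sketch of the content loss, assuming feature maps are given as plain Python lists (one list of activations per channel). The numbers in the example are made up; in a real system they would be activations from a deeper layer of a pre-trained CNN. The ½ factor and squared difference follow the formulation of Gatys et al.

```python
def content_loss(generated_features, content_features):
    """Half the sum of squared differences between two feature maps.

    Each argument is a list of channels; each channel is a flat list of
    activations (one value per spatial position).
    """
    return 0.5 * sum(
        (g - p) ** 2
        for g_ch, p_ch in zip(generated_features, content_features)
        for g, p in zip(g_ch, p_ch)
    )


# Hypothetical activations: 2 channels x 2 spatial positions.
content = [[1.0, 2.0], [0.0, 1.0]]
generated = [[1.0, 0.0], [0.0, 1.0]]
print(content_loss(content, content))    # 0.0: identical features
print(content_loss(generated, content))  # 2.0: one activation off by 2
```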

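Style Loss: This measures the difference between the artistic textures of the new image and the style reference image. Rather than comparing pixels, it compares how strongly different features occur together (their correlations, summarized in a so-called Gram matrix) across several layers. A high style loss means the artistic flair wasn’t captured. The AI also works to minimize this loss.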
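A sketch of the Gram matrix and a single-layer style loss, again on made-up feature lists (channels x positions). The normalization by 4·N²·M² follows Gatys et al. The example highlights the key property: because the Gram matrix sums over all positions, rearranging where features appear leaves it unchanged, so it captures texture and color statistics rather than layout.

```python
def gram_matrix(features):
    """G[i][j] = sum over positions k of F[i][k] * F[j][k].

    Entry (i, j) measures how strongly channels i and j are active together,
    summed over every spatial position, so where things occur is discarded.
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features]
            for fi in features]


def layer_style_loss(generated_features, style_features):
    """Squared Gram-matrix difference for one layer, divided by 4 * N^2 * M^2
    (N channels, M spatial positions per channel)."""
    n = len(style_features)
    m = len(style_features[0])
    g = gram_matrix(generated_features)
    a = gram_matrix(style_features)
    squared_diff = sum((g[i][j] - a[i][j]) ** 2
                       for i in range(n) for j in range(n))
    return squared_diff / (4 * n ** 2 * m ** 2)


# Hypothetical activations: 2 channels x 3 spatial positions.
style = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
# Same activations with positions reversed (same shuffle for every channel).
shuffled = [list(reversed(ch)) for ch in style]
print(gram_matrix(style))                 # [[5.0, 2.0], [2.0, 2.0]]
print(layer_style_loss(shuffled, style))  # 0.0
```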

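At its core, style transfer AI is an optimization puzzle. The algorithm repeatedly refines the pixels of a new image to minimize a weighted sum of the content and style losses. Because the two goals pull in opposite directions, they cannot both reach zero; the weights given to each loss decide whether the result leans toward the original photo or toward the artwork.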
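For readers who want the underlying math, the objective from Gatys et al. can be written as follows. Here F^l and P^l are the layer-l feature maps of the generated and content images, G^l and A^l are the Gram matrices of the generated and style images, N_l is the number of feature channels and M_l the number of spatial positions in layer l, w_l weights each style layer, and α and β set the balance between content and style.

```latex
\mathcal{L}_{\text{content}} = \tfrac{1}{2}\sum_{i,j}\left(F^{l}_{ij} - P^{l}_{ij}\right)^{2}
\qquad
G^{l}_{ij} = \sum_{k} F^{l}_{ik}\,F^{l}_{jk}

E_{l} = \frac{1}{4N_{l}^{2}M_{l}^{2}}\sum_{i,j}\left(G^{l}_{ij} - A^{l}_{ij}\right)^{2}
\qquad
\mathcal{L}_{\text{style}} = \sum_{l} w_{l}\,E_{l}

\mathcal{L}_{\text{total}} = \alpha\,\mathcal{L}_{\text{content}} + \beta\,\mathcal{L}_{\text{style}}
```

Increasing β relative to α pushes the result further toward the artwork’s textures at the expense of the photo’s structure.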

Training the AI’s Artistic Eye

This entire process is powered by a pre-trained CNN. In the original work by Gatys et al., this was VGG-19, a network trained to recognize objects in the large ImageNet image dataset. It was never explicitly taught the difference between “content” (objects, scenes) and “style” (textures, color patterns), but the features it learned along the way capture both.

When you provide your two images, the AI leverages this pre-existing knowledge. For the content image, it focuses on the deeper layers of the network, which are skilled at high-level object recognition. For the style image, it analyzes correlations between features across multiple layers to capture its unique texture and color DNA.
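The layer choice described above can be written down as configuration. The layer names below are the ones Gatys et al. reported for VGG-19: content from conv4_2, and style from the first convolution of each of the five blocks, each style layer weighted equally at 1/5. Other implementations pick different layers, so treat this as one common setup. `combined_style_loss` is a hypothetical helper that turns per-layer style losses into the single style term.

```python
# Layer names follow the conv<block>_<index> convention used for VGG-19.
CONTENT_LAYER = "conv4_2"
STYLE_LAYERS = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]
# Equal weight per style layer; layers not listed get weight 0.
STYLE_WEIGHTS = {name: 1.0 / len(STYLE_LAYERS) for name in STYLE_LAYERS}


def combined_style_loss(per_layer_losses, weights=STYLE_WEIGHTS):
    """Weighted sum of per-layer style losses, keyed by layer name."""
    return sum(weights[name] * per_layer_losses[name] for name in weights)


# Hypothetical per-layer losses for one candidate image.
losses = {"conv1_1": 0.5, "conv2_1": 1.0, "conv3_1": 1.5,
          "conv4_1": 1.0, "conv5_1": 1.0}
print(combined_style_loss(losses))
```

Shallow layers tend to capture fine textures and colors, deeper ones larger-scale patterns, which is why several layers are combined.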

It then generates an image that satisfies both sets of criteria, creating a unique piece of art that fuses human creativity with machine precision. This is the technology that powers accessible tools like LunaBloom AI, making sophisticated AI art generation available to everyone.

Comparing Key Style Transfer Models

Not all style transfer AI models are built the same. They vary in their approach, each offering a different mix of artistic quality, processing speed, and creative flexibility.

Understanding these differences is crucial for choosing the right model for your project, whether you’re creating a single artwork or developing a real-time video application.

The Main Types of Style Transfer Models

Let’s explore the major categories of models that have evolved over time.

- Optimization-Based: These are the original models. They work by meticulously optimizing the pixels of a new image to match the content and style references. The results are often high-quality, but the process is slow, because every new image requires its own long run of optimization steps.

- Feed-Forward (Per-Style-Per-Model): These models are designed for speed. A separate network is trained for a single artistic style. Once trained, it can apply that style almost instantly, making it perfect for real-time video filters. The drawback is needing a new model for every style.

- Arbitrary Style (Fast Arbitrary Style Transfer): This approach combines the speed of feed-forward models with the flexibility of optimization-based ones. A single model is trained to apply any style you give it in a single pass. While the quality might be slightly less refined than optimization-based methods, the versatility is a major advantage.

- Lightweight Mobile: Optimized for efficiency, these models are designed to run on devices like smartphones. They prioritize speed and low memory usage over producing the highest-fidelity output.
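One well-known way a single model can handle any style is Adaptive Instance Normalization (AdaIN), from Huang and Belongie (2017). It rescales each channel of the content image’s features so that its mean and standard deviation match those of the style image’s features. The sketch below shows only that statistics-matching step on plain lists; in the full method it operates on features from a pre-trained encoder, and a trained decoder turns the result back into an image. The small `eps` term, which avoids division by zero, is an illustrative choice.

```python
import math


def _mean_std(values, eps=1e-5):
    """Mean and standard deviation of one channel's activations."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var + eps)


def adain(content_features, style_features):
    """Give each content channel the mean and standard deviation of the
    matching style channel (channels x positions, as plain lists)."""
    out = []
    for c_ch, s_ch in zip(content_features, style_features):
        c_mean, c_std = _mean_std(c_ch)
        s_mean, s_std = _mean_std(s_ch)
        # Normalise with content statistics, re-scale with style statistics.
        out.append([s_std * (v - c_mean) / c_std + s_mean for v in c_ch])
    return out


content = [[1.0, 2.0, 3.0]]    # one channel, three positions (made-up values)
style = [[10.0, 20.0, 30.0]]
print(adain(content, style))   # roughly [[10.0, 20.0, 30.0]]
```

Because no per-style training is involved in this step, any new style image can be used at run time, which is what makes the approach “arbitrary.”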

The pioneering paper by Gatys et al. introduced the optimization-based method. Its slow performance inspired the development of these faster and more flexible alternatives.

Comparison of Style Transfer AI Methods

This table provides a quick comparison to help you understand the trade-offs.

| Method | Key Characteristic | Best For |
|---|---|---|
| Optimization-Based | Highest artistic quality but slow | Creating high-fidelity digital art when speed is not a priority. |
| Feed-Forward | Extremely fast for a single, pre-trained style | Real-time applications like live video filters and interactive demos. |
| Arbitrary Style | Apply any style with a single model | Flexible apps where users can upload their own style images. |
| Lightweight Mobile | Low memory and resource usage | On-device style transfer for mobile applications. |

As you can see, there’s a constant trade-off between quality, speed, and flexibility. Selecting the right model means knowing which of these factors is most important for your use case.

Real-World Applications Beyond Art Filters

While turning photos into art is a popular use of style transfer AI, its applications extend far beyond personal creativity. This technology is becoming a powerful tool for industries ranging from entertainment to scientific research.

Companies are leveraging its unique visual capabilities to solve practical problems and drive innovation. For instance, a marketing team can use style transfer to apply a consistent brand aesthetic across all its visual assets, ensuring a cohesive and memorable identity.

Innovation in Creative Industries

The film, fashion, and gaming industries have been quick to adopt style transfer AI, integrating it into their creative workflows.

- Film and Animation: Visual effects artists can apply a specific aesthetic, such as the look of vintage film or a futuristic sci-fi vibe, to scenes, which can significantly speed up post-production.

- Fashion and Textile Design: Designers can rapidly prototype new fabric patterns. Imagine transferring the texture of a marble sculpture onto a clothing design in seconds.

- Gaming: Developers use style transfer to generate diverse game environments and character skins, creating rich, stylized worlds more efficiently.

Style transfer AI acts as a creative amplifier. It doesn’t replace artistic talent but rather enhances it, allowing professionals to explore and execute visual ideas at an unprecedented speed.

Beyond Art and Entertainment

The technology’s impact is also being felt in more analytical fields. In medical imaging, researchers are exploring style transfer to make scans from different machines or hospitals look consistent and to generate additional training images for diagnostic models, which could eventually help doctors reach faster and more accurate diagnoses.

The fine art market has also embraced this technology. The global AI art market, where style transfer plays a significant role, was valued at $3.2 billion and is projected to reach $40.4 billion by 2033. With over 35% of fine art auctions now including AI-generated works, it’s clear that this tech is reshaping how art is created, sold, and valued. You can learn more about AI in the art market and its future trends.

The Future of Creative Generative AI

Style transfer is a foundational technique within the broader field of generative AI—a revolutionary area of technology that is transforming how we create everything from art and music to code. Understanding style transfer’s place in this ecosystem helps reveal the exciting future of digital creation.

The core principle of separating and recombining data elements is shared across many generative models. Today, this family of technologies has evolved far beyond artistic filters into sophisticated tools that offer incredible realism and creative control.

The Expanding Generative AI Toolkit

While style transfer excels at artistic reimagining, other models are pushing the boundaries of creation in different ways. Two prominent examples are Generative Adversarial Networks (GANs) and Diffusion Models.

Generative Adversarial Networks (GANs): GANs involve two neural networks, a “generator” and a “discriminator,” competing against each other. The generator creates images, while the discriminator tries to tell them apart from real images. This competition pushes the generator to produce highly realistic, often photorealistic images.
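This competition is usually written as a two-player minimax objective, as in the original GAN formulation by Goodfellow et al. (2014). The discriminator D tries to maximize it by scoring real images x close to 1 and generated images G(z) close to 0, while the generator G tries to minimize it; z is random noise fed to the generator.

```latex
\min_{G}\max_{D}\;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```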

Diffusion Models: These models start with random noise and remove it step by step until a coherent image emerges; in text-to-image systems, a text prompt guides each step. This gives creators fine-grained control, allowing them to generate highly specific and detailed scenes from simple text descriptions.
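To make “starting from noise” concrete, the sketch below shows the opposite, forward direction used when training a denoising diffusion model: an image (here a short list of pixel values) is blended with Gaussian noise according to a schedule until almost nothing of the original remains. A network is then trained to predict and remove that noise step by step, which is what lets the model start from pure noise at generation time; text-to-image models additionally feed the prompt to that network. The linear schedule from 0.0001 to 0.02 over 1,000 steps follows the DDPM paper by Ho et al. (2020); the function names are illustrative.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t of (1 - beta_t) for a linear schedule."""
    alpha_bars = []
    prod = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    return [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for v in x0]


rng = random.Random(0)
alpha_bars = linear_alpha_bars()
x0 = [0.5, -0.2, 0.9]
print(add_noise(x0, 0, alpha_bars, rng))    # nearly x0
print(add_noise(x0, 999, alpha_bars, rng))  # nearly pure noise
```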

These advancements are fueling a new wave of creative tools that are more intuitive and powerful than ever. For a look at how this technology is being applied today, you can find great examples of companies powering diverse generative AI applications.

A Market Poised for Explosive Growth

The excitement around these technologies is backed by significant economic growth. The global Generative AI market, valued at USD 13.5 billion in 2023, is projected to soar to USD 255.8 billion by 2033, with a compound annual growth rate of 34.2%. This indicates a massive shift in creative and business workflows.
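The quoted growth rate can be sanity-checked from the two endpoints with the standard compound-annual-growth-rate formula, (end / start)^(1 / years) − 1, over the ten years from 2023 to 2033:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(13.5, 255.8, 2033 - 2023)  # values in USD billions
print(f"{rate:.1%}")  # 34.2%
```

The endpoints and the stated 34.2% rate are consistent with each other.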

The creative platforms of the future will blend different generative AI models, enabling users to mix artistic styles, generate photorealistic elements, and direct complex scenes with simple commands—all within a single, seamless workflow.

This fusion of capabilities is the driving force behind platforms like https://lunabloomai.com/, which aim to democratize advanced video creation. The focus is shifting from static image edits to dynamic, controllable, and deeply personalized content.

FAQs About Style Transfer AI

Here are answers to some of the most common questions about style transfer AI, designed to give you clear and direct information.

What hardware is needed to run style transfer AI?

The hardware you need depends on your use case.

- For casual users: If you’re using web-based tools, any modern computer or smartphone with a stable internet connection will work fine. The processing is done in the cloud.

- For developers and artists: If you plan to train your own models or process high-resolution images locally, a powerful GPU (Graphics Processing Unit) is essential. NVIDIA GPUs are the industry standard, as most AI frameworks are optimized for NVIDIA’s CUDA platform.

Without a capable GPU, processing a single high-quality image can take several minutes instead of seconds, especially with optimization-based methods, which can disrupt the creative process. For real-time video, a high-end GPU is necessary to avoid lag.

Are there legal or ethical issues with using famous art?

Yes, this is a critical and complex area. Using existing artworks for style transfer, especially for commercial purposes, can raise copyright and intellectual property concerns.

Here’s a simple breakdown:

- Public Domain: Works by artists like Van Gogh or Rembrandt are in the public domain because their copyrights have expired. Using these is generally safe.

- Modern Art: Using art from a living artist or one who died recently (typically within the last 70 years) without permission can lead to copyright infringement.

- Fair Use: In the United States, you could argue that your work is “transformative” and protected by fair use, but this is a legal gray area and can be difficult to defend.

For any commercial project, the safest approach is to use public domain art or obtain explicit permission from the copyright holder.

How is this different from a regular photo filter?

The difference is fundamental. A standard photo filter, like those on social media, is a pre-set overlay. It applies the same uniform adjustments (e.g., changing colors, contrast, or adding grain) to any image.
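A minimal sketch of what a “pre-set” filter means in practice: one fixed formula applied to every pixel independently. The sepia coefficients below are a commonly used set, chosen for illustration. The output for each pixel depends only on that pixel’s own color, never on what the image shows or on a reference artwork, and that missing context is exactly what style transfer adds.

```python
def sepia_filter(pixels):
    """Apply the same fixed colour transform to every (r, g, b) pixel (0-255)."""
    out = []
    for r, g, b in pixels:
        nr = 0.393 * r + 0.769 * g + 0.189 * b
        ng = 0.349 * r + 0.686 * g + 0.168 * b
        nb = 0.272 * r + 0.534 * g + 0.131 * b
        # Round and clip to the valid 0-255 range.
        out.append(tuple(min(255, round(c)) for c in (nr, ng, nb)))
    return out


print(sepia_filter([(0, 0, 0), (255, 255, 255)]))  # [(0, 0, 0), (255, 255, 239)]
```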

Style transfer AI is a generative process. It doesn’t just modify your photo; it creates a brand-new image. The AI analyzes the content of your photo and the artistic elements of the style image. It then uses this understanding to repaint your original photo from the ground up in the new style. This is why the results are far more detailed, artistic, and unique than what any simple filter can achieve.

Conclusion

Style transfer AI is more than just a novelty; it’s a powerful technology that stands at the intersection of human creativity and machine intelligence. By deconstructing images into content and style, it opens up a world of visual possibilities, transforming ordinary photos into unique works of art.

As we’ve seen, its applications are already reshaping industries from entertainment to medicine, and it serves as a key building block for the rapidly expanding universe of generative AI. Whether you’re an artist, a developer, or simply curious about the future of creativity, style transfer offers a compelling glimpse into what’s possible when we teach machines to see the world not just as it is, but as it could be.

Ready to bring your own creative visions to life? LunaBloom AI makes it easy to generate stunning, professional-quality videos from simple text inputs. Explore our features and start creating today.